6 Ways Top Organizations Test Software


Cynthia Dunlop

Cynthia has been writing about software development, testing and enterprise automation for much longer than she cares to admit. She’s currently director of content and customer marketing at Tricentis.

Tricentis just released its first How the World’s Top Organizations Test report, which analyzes how industry leaders test the software that their businesses and the world rely on. For this first-of-its-kind report, we interviewed 100 quality leaders at Fortune 500 or global equivalent organizations and major government agencies across the Americas, Europe and Asia-Pacific.

Although there is no shortage of reports on overall software testing trends, the state of testing at the organizational level, particularly at “household name” brands, has historically been a black box. On the one hand, large organizations often have access to resources far beyond the reach of smaller businesses, such as commercial and open source software, consultants and services. On the other hand, they face daunting challenges, such as:

- Complex application stacks that involve an average of 900 applications. Single transactions touch an average of 82 different technologies, ranging from mainframes and legacy custom apps to microservices and cloud native apps.

- Deeply entrenched manual testing processes that were designed for waterfall delivery cadences and outsourced testing, not agile, DevOps, and the drive towards “continuous everything.”

- Demands for extreme reliability. Per IDC, an hour of downtime in enterprise environments can cost from $500,000 to $1 million. “Move fast and break things” is not an option in many industries.

How do these pressures affect quality processes on the ground? The following six data points shed light on how enterprise quality leaders approach core challenges related to test case design, automation, measurement and reporting.

6 Key Data Points

1. How do you determine where to apply test automation?

It’s typically not feasible, or even desirable, to automate every test scenario. How do organizations determine where to start and what to focus on? Common approaches include:

- Business impact: Prioritize the applications that are most important to the business.

- Effort savings: Prioritize what’s consuming the most testing resources.

- Frequency of updates: Prioritize the applications that release most frequently.

- Technical feasibility: Prioritize what’s simplest to automate given the available tools, people and processes.

- Application maturity: Prioritize more stable applications vs. those that are still evolving significantly.

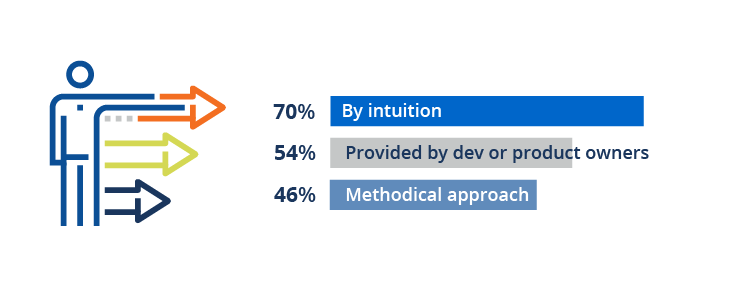
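
One way to combine the criteria above is a simple weighted score per application. The sketch below is purely illustrative: the weights, the 1-to-5 rating scale, the application names and the ratings are all hypothetical and do not come from the report.

```python
# Hypothetical weights for the five criteria listed above (they sum to 1.0).
CRITERIA_WEIGHTS = {
    "business_impact": 0.30,
    "effort_savings": 0.25,
    "update_frequency": 0.20,
    "technical_feasibility": 0.15,
    "maturity": 0.10,
}


def automation_score(ratings):
    """Weighted sum of 1-5 ratings; a higher score suggests automating sooner."""
    return sum(weight * ratings[name] for name, weight in CRITERIA_WEIGHTS.items())


# Invented applications and ratings, for illustration only.
applications = {
    "billing": {
        "business_impact": 5, "effort_savings": 4, "update_frequency": 2,
        "technical_feasibility": 3, "maturity": 5,
    },
    "mobile_app": {
        "business_impact": 4, "effort_savings": 3, "update_frequency": 5,
        "technical_feasibility": 4, "maturity": 2,
    },
    "intranet_wiki": {
        "business_impact": 1, "effort_savings": 1, "update_frequency": 2,
        "technical_feasibility": 5, "maturity": 4,
    },
}

# Rank applications from highest to lowest automation priority.
ranked = sorted(
    applications,
    key=lambda name: automation_score(applications[name]),
    reverse=True,
)

for name in ranked:
    print(f"{name}: {automation_score(applications[name]):.2f}")
```

In practice, a team would choose weights that reflect its own priorities. An organization that cares most about release cadence, for example, might weight update frequency more heavily.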

2. How do you approach test design and creation?

Designing the right tests is important for achieving the maximum impact as efficiently as possible. It also aids in debugging and reduces the burden of test maintenance. Common test design approaches include:

- By intuition: Testers rely on their own intuition and experience. They use their understanding of the application and business process to create a comprehensive testing suite that covers “happy paths,” negative paths and edge cases.

- Provided by dev or product owners: The developer or product owner who defined the requirement tells the testing team the functionality to test and might even define the steps.

- Methodical approach: Testers use industry-standard test case design methodologies — like pairwise, orthogonal, or linear expansion — to ensure high requirements coverage.
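
To make the methodical approach more concrete, here is a minimal sketch of pairwise test design using a simple greedy strategy. It is not the algorithm used by any particular tool, and the parameters (`browser`, `os`, `account`) are hypothetical. The idea is to pick test cases so that every pair of values from two different parameters appears in at least one case, without running every possible combination.

```python
from itertools import combinations, product


def pairwise_suite(parameters):
    """Greedily pick test cases until every value pair is covered at least once."""
    names = list(parameters)

    # Every pair of (parameter, value) assignments across two different parameters.
    uncovered = {
        ((a, va), (b, vb))
        for a, b in combinations(names, 2)
        for va in parameters[a]
        for vb in parameters[b]
    }

    # All exhaustive combinations serve as candidates (fine for small inputs).
    candidates = [dict(zip(names, values)) for values in product(*parameters.values())]

    def pairs_of(case):
        return {((a, case[a]), (b, case[b])) for a, b in combinations(names, 2)}

    suite = []
    while uncovered:
        # Choose the candidate that covers the most still-uncovered pairs.
        best = max(candidates, key=lambda case: len(pairs_of(case) & uncovered))
        suite.append(best)
        uncovered -= pairs_of(best)
    return suite


# Hypothetical parameters for illustration.
parameters = {
    "browser": ["Chrome", "Firefox", "Safari"],
    "os": ["Windows", "macOS", "Linux"],
    "account": ["guest", "member"],
}

suite = pairwise_suite(parameters)
exhaustive = len(list(product(*parameters.values())))
print(f"{len(suite)} pairwise cases instead of {exhaustive} exhaustive combinations")
```

A greedy selection like this does not guarantee the smallest possible suite, and enumerating every combination as a candidate only scales to small parameter sets. Dedicated tools use more efficient algorithms.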

3. How do you measure test suite coverage?

Test coverage is often used to determine when enough testing has been completed. The ways to measure coverage vary widely, and different measurements can yield dramatically different levels of confidence. Test coverage is often measured by:

- Requirements coverage: Tests are correlated to requirements and all requirements are treated equally. Covering half the requirements would yield 50% requirements coverage, whether those requirements were business-critical or trivial.

- Business risk coverage: Requirements are weighted according to the business risk they represent, then tests are measured based on the risk coverage they achieve. You could feasibly achieve 75% business risk coverage by testing just 15% of your requirements — or end up testing 90% of your requirements, but achieving only 50% business risk coverage.

- Number of test cases: Some teams are incentivized based on creating a certain number of tests. Focusing on quantity vs. quality can result in redundant tests and a test suite that’s difficult to maintain.
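
The gap between requirements coverage and business risk coverage is easier to see with a small calculation. The sketch below assumes each requirement carries a relative risk weight. The requirement names and weights are invented so that the numbers mirror the 15% versus 75% example above; they are not data from the report.

```python
from dataclasses import dataclass


@dataclass
class Requirement:
    name: str
    risk_weight: int  # hypothetical relative business risk
    tested: bool


def requirements_coverage(requirements):
    """Share of requirements with at least one test; all are treated equally."""
    return sum(r.tested for r in requirements) / len(requirements)


def business_risk_coverage(requirements):
    """Share of total risk weight that belongs to tested requirements."""
    total = sum(r.risk_weight for r in requirements)
    covered = sum(r.risk_weight for r in requirements if r.tested)
    return covered / total


# Invented data: 3 high-risk requirements are tested, 17 low-risk ones are not.
requirements = (
    [
        Requirement("payment processing", 40, True),
        Requirement("login", 20, True),
        Requirement("order placement", 15, True),
    ]
    + [Requirement(f"minor feature {i}", 2, False) for i in range(8)]
    + [Requirement(f"cosmetic item {i}", 1, False) for i in range(9)]
)

print(f"Requirements coverage:  {requirements_coverage(requirements):.0%}")
print(f"Business risk coverage: {business_risk_coverage(requirements):.0%}")
```

With these made-up weights, testing 3 of 20 requirements gives 15% requirements coverage but 75% business risk coverage, because the tested requirements carry most of the risk.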

4. Which of the following metrics on the business impact of testing do you track and report on?

Organizations tend to measure business impact in terms of the so-called iron triangle of quality, cost and speed. In terms of testing, they track and report on:

- Defects prevented: How testing optimization exposes more issues prior to release.

- Cost savings: How testing optimization frees up resources that can be allocated for other tasks, plus the cost avoidance from exposing issues prior to release.

- Speed to market: How testing optimization enables the team to release faster with confidence.

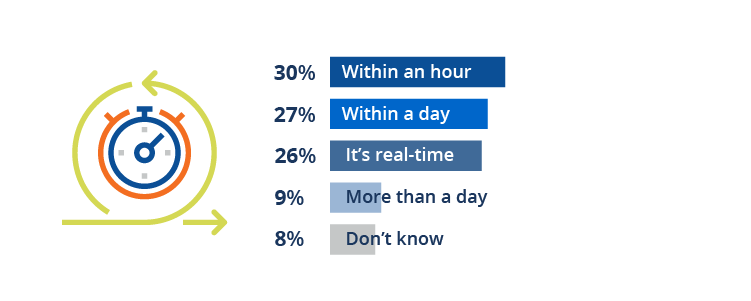

5. How long does it take you to generate the quality reports you need?

This question assesses how long it takes QA leaders to generate the reports they need to understand the health and quality of their applications. Such reports are not necessarily the output of any single tool; producing them often requires manually correlating results from multiple tools.

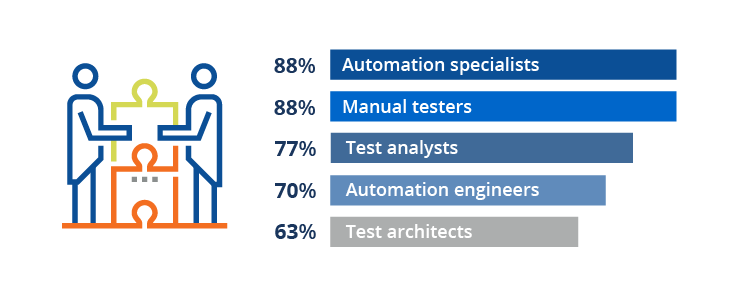

6. What QA roles do you have in your team (or available to support your team)?

Key testing roles seen across the most mature organizations include:

- Automation specialists who automate tests based on the test strategy set by the team.

- Manual testers who create, maintain and execute manual tests, including exploratory tests.

- Test analysts who ensure that the proper test cases are created and identify additional gaps that should be covered.

- Automation engineers who typically support automation specialists through building frameworks or extending tools to simplify automation efforts.

- Test architects who understand the overall test strategy and are responsible for ensuring that the team follows best practices around tools and processes.

More Findings on How Top Organizations Test

To learn more about how industry leaders test their software, read the complete benchmark report. You’ll learn:

- How leaders and laggards differ on key software testing and quality metrics.

- Where most organizations stand in terms of CI/CD integration, test environment strategy, and other key process elements.

- What test design, automation, management and reporting approaches are trending now.

- Organizations’ top priorities for improving their testing over the next six months.

InApps Technology is a wholly owned subsidiary of Insight Partners, an investor in the following companies mentioned in this article: Tricentis.

Lead image via Pixabay.

Source: InApps.net
