- Home

- >

- DevOps News

- >

- What Is Data Observability and Why does it matter?

What Is Data Observability and Why does it matter?

In 2021, most businesses’ websites, pitch decks, and job descriptions lay claim to their identities as data-driven companies. Behind this desirable label, you’ll usually find an admirable goal—and not much else. Well, beyond an awful lot of unused data.

Well-meaning companies are collecting and storing data, but don’t allow it to actually drive their operations. We’ve found this is often due to a complete, and not unwarranted, lack of trust in the data itself.

As companies gather seemingly endless streams of data from a growing number of sources, they begin to amass an ecosystem of data storage, pipelines, and would-be end users. With every additional layer of complexity, opportunities for data downtime — moments when data is partial, erroneous, missing, or otherwise inaccurate — multiply.

Forrester estimates that data teams spend upwards of 40% of their time on data quality issues instead of working on revenue-generating activities for the business. And too many data engineers know the pain of that panicked phone call — the numbers in my dashboard are all wrong. I have a presentation in an hour. What happened to our data?

At this point, unreliable data is an expected inconvenience. It’s a pain, sure, but it’s also a problem for almost every company on a regular basis. And it’s hard to let something you don’t trust drive your company’s decision-making, let alone your product.

What Data Engineering Teams Can Learn from DevOps

Twenty years ago, when a company’s website or application went down or became unavailable, it was considered par for the course. Today, if Slack, Twitter, or LinkedIn suffers an outage, it makes national headlines. Online applications have become mission-critical to almost every business, and companies invest appropriately in avoiding service interruptions.

That’s how far application engineering has come in the last several years. And that’s precisely where data engineering needs to go.

We predict that as companies increasingly rely on and invest in data as a key business driver, instances of broken pipelines, missing fields, null values, and the like will go from a tolerated inconvenience to inconceivably rare.

Executives won’t have their data team leads on speed-dial for troubleshooting when an important dashboard looks out of whack — because it won’t happen. Over the next decade, we’ll reach a new level of data reliability. And we’ll get there by following in the footsteps of application engineers to develop our own specialty of DataOps.

Already, innovative data teams at leading companies like GitLab are applying best practices of DevOps to their data pipelines and processes. These teams are investing in tooling, developing data-specific SLAs, and delivering on the high level of data health their companies need to flourish.

As we see more data teams across more companies make similar strides, we’ll enter the new frontier of data engineering: preventing data downtime from happening in the first place. This will be accomplished as more companies prioritize data observability.

Why Data Observability: The Next Frontier of Data Engineering

At its simplest, data observability means maintaining a constant pulse of the health of your data systems by monitoring, tracking, and troubleshooting incidents to reduce — and eventually prevent — downtime.

Data observability shares the same core objective as application observability: minimal disruption to service. And both practices involve testing as well as monitoring and alerting when downtime occurs. That said, there are a few key differences between data and application observability.

Application observability answers questions like, What services did a request go through? Where were the performance bottlenecks? Why did this request fail?

Data observability should tell you, What are my most critical data sets, and who uses them? Why did 5,000 rows turn into 50? Why did this pipeline fail?

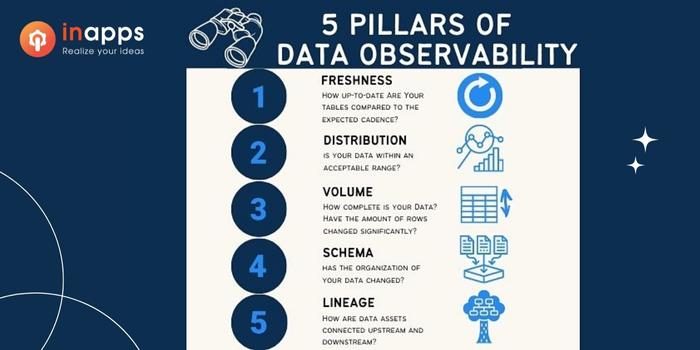

While application observability is centered around three major pillars — metrics, logs, and traces — data engineers can refer to five pillars of data observability. We need to understand:

- Freshness: How up-to-date are your data tables?

- Distribution: Do your data values fall within an accepted range?

- Volume: Are your data tables complete?

- Schema: Who made what changes to your data, and when?

- Lineage: The full picture of your data landscape, including upstream sources, downstream ingestors, and who interacts with your data at which stages.

Additionally, unlike most software applications, your data must be monitored at-rest, without leaving your environment, to keep it secure. In the ideal scenario, data observability tooling shouldn’t ever require access to your individual records or sensitive information — relying instead on metadata and query logs to understand your data lineage and measure its reliability.

When data observability is reached, data teams will spend less time firefighting. More employees may become data consumers, knowing where and how to access data to inform their work. And executives can finally learn to stop second-guessing analytics.

The Future of DataOps and Data Observability

Achieving data observability isn’t easy. But just like DevOps in the early 2010s, DataOps will become increasingly critical in this fledgling decade.

We predict that within the next five years, all data engineering teams will bake observability into their strategy and tech stack. And more companies will earn that data-driven moniker as healthy data is trusted and put to good use, powering technology and decision-making alike.

FAQs

What is data observability?

“Data observability” is the blanket term for understanding the health and the state of data in your system. Essentially, data observability covers an umbrella of activities and technologies that, when combined, allow you to identify, troubleshoot, and resolve data issues in near real-time.

Why observability matters?

Observability is important because it gives you greater control over complex systems. Simple systems have fewer moving parts, making them easier to manage. Monitoring CPU, memory, databases and networking conditions is usually enough to understand these systems and apply the appropriate fix to a problem.

Lastly, if ever you feel the need to learn more about technologies, frameworks, languages, and more – InApps will always have you updated.

List of Keywords users find our article on Google

Let’s create the next big thing together!

Coming together is a beginning. Keeping together is progress. Working together is success.